About CoSInES

The CoSInES project brings together a world-class team of researchers from the Universities of Warwick, Bristol, Lancaster and Oxford, and the Alan Turing Institute, to tackle fundamental challenges in Computational Statistics and key applications in Engineering and Security. The project will run from 1st October 2018 to 30th September 2023.

The 21st-century data revolution provides exciting opportunities for enhancing our understanding of the world in virtually every area of human activity. However, the process of moving from data to scientific understanding is often challenging. Statistical science provides a principled framework for this process, in contrast to the pure prediction that is central to many machine learning procedures. However, as the size and complexity of modern data sets continue to increase, and the understanding sought from the data becomes more ambitious, fresh challenges arise in implementing formal statistical approaches. While 20th-century computational statistical methodologies (often based on Markov chain Monte Carlo and Sequential Monte Carlo methods and their variants) have brought complex problems within the grasp of classical and Bayesian model-based paradigms, today's complex problems pose immense challenges for principled statistical methods. Our vision is to bridge the gap between Statistical Science and the most challenging inferential problems posed by Data Science.

While we will create generic methodology with widespread application in many areas, we will take our motivation from, and place major emphasis on, the important emerging areas of Data-centric Engineering and Defence & Security. We will foster a research collaboration with strong synergies between, on the one hand, statistical theory, algorithms and methodology and, on the other hand, substantive problems of great societal interest within engineering and security. The problems that CoSInES will tackle are characterised by large, complex data sets indexed in space and often continuously in time. New models will require not only spatial components but also large network and time-series structures, often requiring the solution of (stochastic) differential equations. Inference will require a completely new generation of algorithms, likely combining sophisticated Monte Carlo and deterministic approaches. Algorithms will need to be tailored to particular computer architectures and will require underpinning theory to show that they scale. New theory on the computational and statistical robustness of these approaches will be required to ensure their usefulness.

See News for highlighted papers published by members of the CoSInES project and for position announcements.

Our Research

Our research focuses on the following themes; a list of recent references is provided on the research outputs page.

Scalable Statistical and Computational Methodology

There now exists a huge array of sophisticated MCMC, SMC, ABC, EM and other algorithms for tackling high-dimensional likelihood-based statistical problems. Many are themselves approximate, or admit natural, computationally cheaper approximations. While impressive advances in our understanding of these methodologies have been made this century, a better understanding of their robustness, scalability, and statistical and algorithmic efficiency is urgently required to underpin ever more complex applications. Moreover, a better understanding of the nature of approximate likelihood methods is needed to fully understand the trade-off between computational efficiency and exactness. Particular challenges exist for high-dimensional, often high-frequency, time-indexed structured data.

Developing principled statistical approaches to big data

The first algorithms for Bayesian inference with big data have emerged during the last five years, although many involve approximations whose error is difficult to quantify away from near-Gaussian settings, and exact methods are currently extremely complex and often slow. Applications demand methods that are robust to non-Gaussianity and practically implementable across a wide range of big data contexts.

Synergising modern computational architectures with Computational Statistics

Some progress in the design of parallel and distributed statistical algorithms has been made in the last few years. However, architectural considerations and high-performance implementations have often been viewed as a subsidiary engineering challenge, and there is a lack of robust, mature algorithmic approaches and performant code. This is in stark contrast to the continued development of much-needed, architecture-aware packages in other areas of Data Science, some of which have revolutionised the practice of data analysis and driven theoretical and methodological innovation.

Underpinning theory and methodology for optimising high- and infinite-dimensional algorithms

A theoretical understanding of the scaling and complexity properties of MCMC and SMC methods is of vital importance for guiding practice (for example, in algorithm choice, optimal scaling, and deciding how to initialise and terminate runs). The area has made great strides in recent years, although relatively little is still known about the highly heterogeneous statistical problems that will typically be encountered within CoSInES.

Addressing statistical and computational problems associated with model misspecification

Under model misspecification, a major challenge is to develop statistical approaches that retain the benefits of probabilistic models while maintaining computational tractability and robustness to misspecification. Moreover, it is extremely common for MCMC methods to be robust and efficient on simulated data, only to suddenly mix badly on real data. However, this phenomenon is not well understood, and there is a dearth of theory describing how the convergence of MCMC for Bayesian posterior distributions depends on the particular data set observed.

Enabling statistical inference under privacy constraints

Within both commercial and security contexts, it is increasingly common for data to be only partially available to statisticians due to confidentiality and privacy constraints. Carrying out statistical inference on encrypted data has become a realistic aim thanks to the introduction of homomorphic encryption and subsequent pioneering work. However, only very simple statistical analyses are currently feasible, and new methods will require algorithmic developments closely tailored to rapid advances in homomorphic encryption.

Targeted Applications

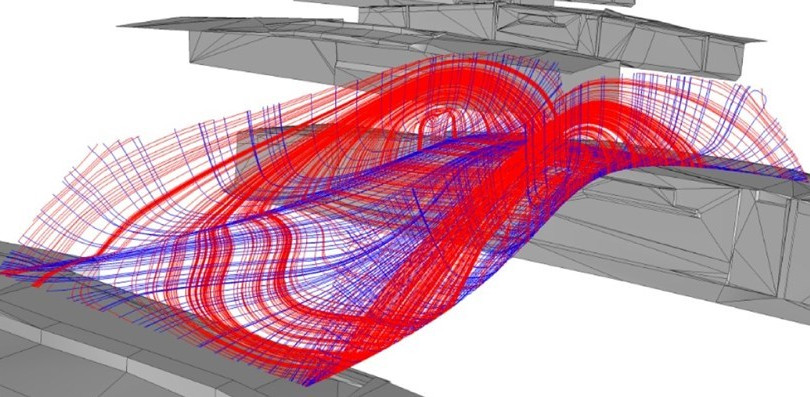

Data Centric Engineering

Across every sector of the engineering professions, and the disciplines that underpin them, there is a realisation of the increasing value of all forms of data and the central role data now plays in the design, manufacture, construction, commissioning, operation, maintenance and decommissioning of complex engineering systems and projects (see, e.g., the recent Royal Academy of Engineering and Institution of Engineering and Technology report on driving productivity and innovation by connecting data). The Royal Society, along with the British Academy, has also published a report on Data Management and Use, with a specific case study on Smart Metering in the power supply industry. This shift is largely driven by the confluence of emerging technologies that enable measurement at levels of precision, range and coverage previously considered infeasible, making unprecedented volumes of data available. As such, the statistical sciences are the essential collaborative driver of this data-driven, disruptive change in engineering, and this programme is set to deliver essential advances to realise its potential.

Defence and Security

As individuals generate more data and devise new ways to interact, propagate and receive information, and relate to one another in an increasingly digital world, the security authorities face a set of dynamic and challenging tasks in keeping the UK secure. The data that people produce, individually and collectively, could potentially be used to understand a great deal about them. There is a need to understand what this data can, and cannot, reliably tell us, in order to understand and optimise security strategies and gain a deep understanding of individual and group intent, while simultaneously respecting citizens' privacy. With respect to this latter point, the development of privacy-preserving inference procedures is essential as regulation evolves. For these reasons, the development of new mathematical and statistical techniques that offer improved statistical insight is crucial to keeping the UK secure.
